From a USC study earlier this year:

What we pay for medicines today affects the number and kinds of drugs discovered tomorrow. Empirical research has established that drug development activity is sensitive to expected future revenues in the market for those drugs. The most recent evidence suggests that it takes $2.5 billion in additional drug revenue to spur one new drug approval, based on data from 1997 to 2007.

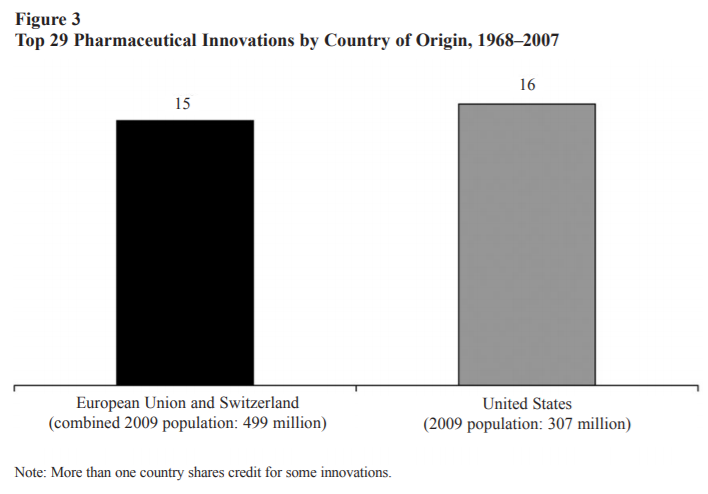

Another study assesses the Orphan Drug Act, passed in 1982 to stimulate development of treatments for rare diseases. Its key feature was the granting of market exclusivity that would restrict entry by competitors— in other words, allow for higher prices. The result was a dramatic increase in the number of compounds brought into development to treat rare diseases (figure 3). This linkage may not help patients with tuberculosis today in Nigeria and Indonesia — two poor countries hardest hit by tuberculosis—but it is currently benefiting patients in the same countries who have HIV. Decades ago, demand for HIV treatment in wealthy countries spurred medical breakthroughs that have since found their way — albeit more slowly than we would like — into the poorest corners of the globe. As of July 2017, 20.9 million people living with HIV were accessing antiretroviral therapy globally; 60 percent of them live in eastern and southern Africa.

American consumers may feel some philanthropic pride about the benefits they have spurred for the world’s poorest HIV patients. But similar benefits are also enjoyed by German, British, and French HIV patients, and were financed by the same revenues generated, in large part, by high American drug prices. Whether one sees this as philanthropy on the part of American drug buyers, or free-riding on the part of other wealthy countries who pay much less for the same drugs, America clearly contributes more to pharmaceutical revenue, and hence incentives for new drug development, than its income and population size would suggest (pg. 2).

The study goes on to point out that Americans spend three times as much on drugs as Europeans, and 90% more as a share of income. American consumers “account for about 64 to 78 percent of total pharmaceutical profits, despite accounting for only 27 percent of global income…American patients use newer drugs and face higher prices than patients in other countries” (pg. 2-3). Most policy discussions focus on lowering American prices. But what if we instead raised European prices? The researchers write,

Increasing European prices by 20 percent — just part of the total gap — would result in substantially more drug discovery worldwide, assuming that the marginal impact of additional investments is constant. These new drugs lead to higher-quality and longer lives that benefit everyone. After accounting for the value of these health gains — and netting out the extra spending — such a European price increase would lead to $10 trillion in welfare gains for Americans over the next 50 years. But Europeans would also be better off in the long run, by $7.5 trillion, weighted towards future generations. This is because European populations are rapidly aging, and they need new drugs too…At the end of the day…evidence conclusively demonstrates that higher expected revenues leads to more drug discovery, with the most recent numbers suggesting that on average every $2.5 billion of additional revenue leads to a new drug approval (pg. 4).

In short, “if other wealthy countries shouldered more of the burden for medical innovation, both American and European patients would benefit” (pg. 5). Recent research confirms that the difference between U.S. and European healthcare is driven mainly by prices. The authors suggest that more can be done through trade, international harmonization of regulatory standards, and further research on the costs of free-riding. “As incomes in less-developed countries rise,” they conclude, “they will face the challenges of fighting conditions like diabetes, heart disease, and even dementia. Spending a bit more now to ensure their populations have access to effective treatment is in everyone’s interest” (pg. 5).
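To make the study's rule of thumb concrete, here is a minimal sketch of the arithmetic behind it. It takes the quoted figure of roughly $2.5 billion in additional revenue per new drug approval and the study's stated assumption that the marginal impact of additional investment is constant. The function name and the $50 billion input are my own hypothetical illustrations, not numbers from the study.

```python
# Rule of thumb quoted from the study: roughly $2.5 billion of additional
# revenue per new drug approval (based on 1997-2007 data).
REVENUE_PER_APPROVAL_USD = 2.5e9


def implied_new_approvals(additional_revenue_usd: float) -> float:
    """Approvals implied if the marginal impact of revenue is constant.

    This linear rule is the study's simplifying assumption; the helper
    itself is a hypothetical illustration, not the authors' model.
    """
    if additional_revenue_usd < 0:
        raise ValueError("additional revenue must be non-negative")
    return additional_revenue_usd / REVENUE_PER_APPROVAL_USD


# Hypothetical input for illustration only (not a figure from the study).
hypothetical_extra_revenue_usd = 50e9
print(implied_new_approvals(hypothetical_extra_revenue_usd))  # 20.0
```

The point of the sketch is only that, under a constant marginal impact, implied approvals scale in direct proportion to revenue. If returns to additional investment diminish, the true number would be lower.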

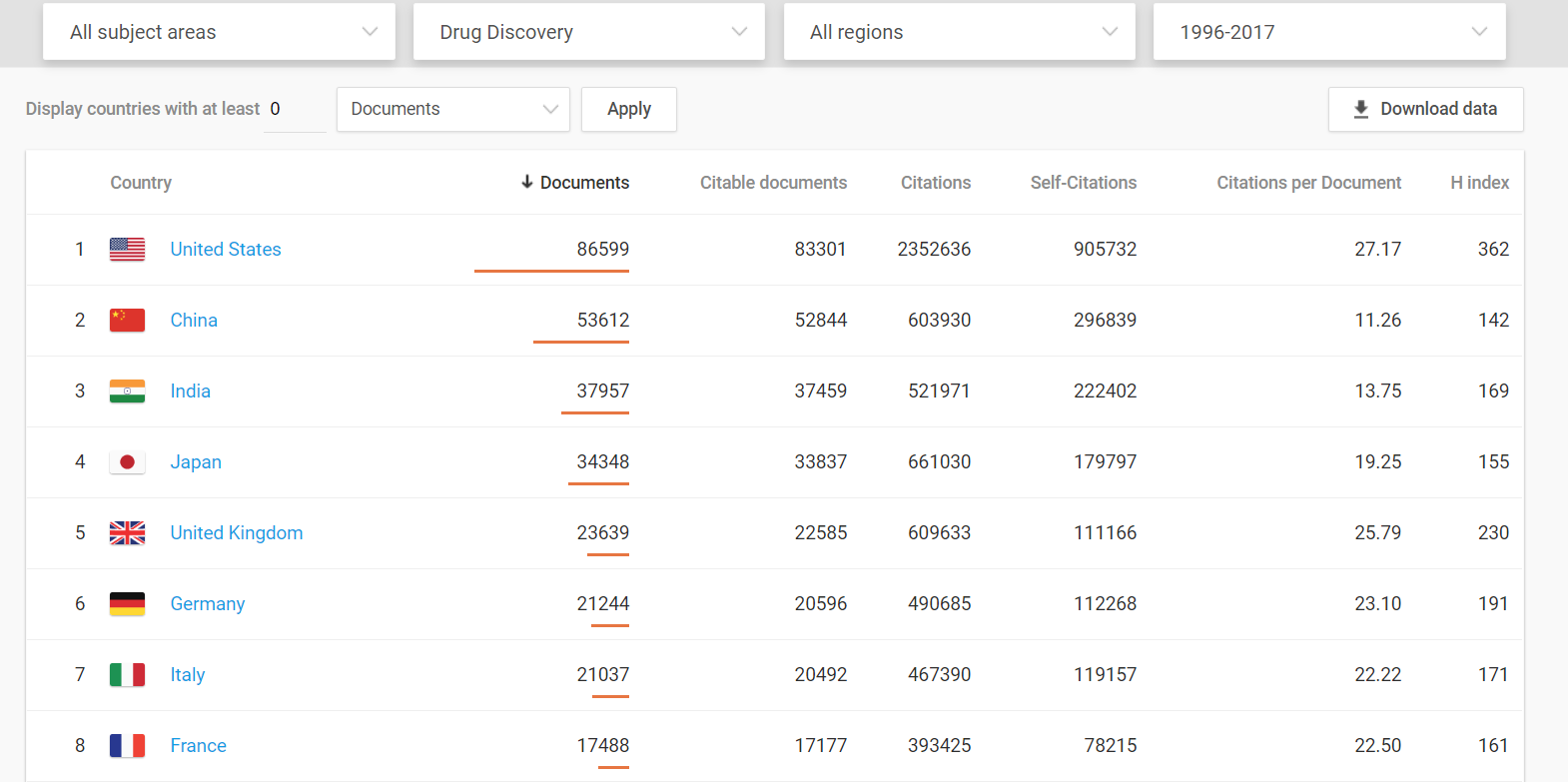

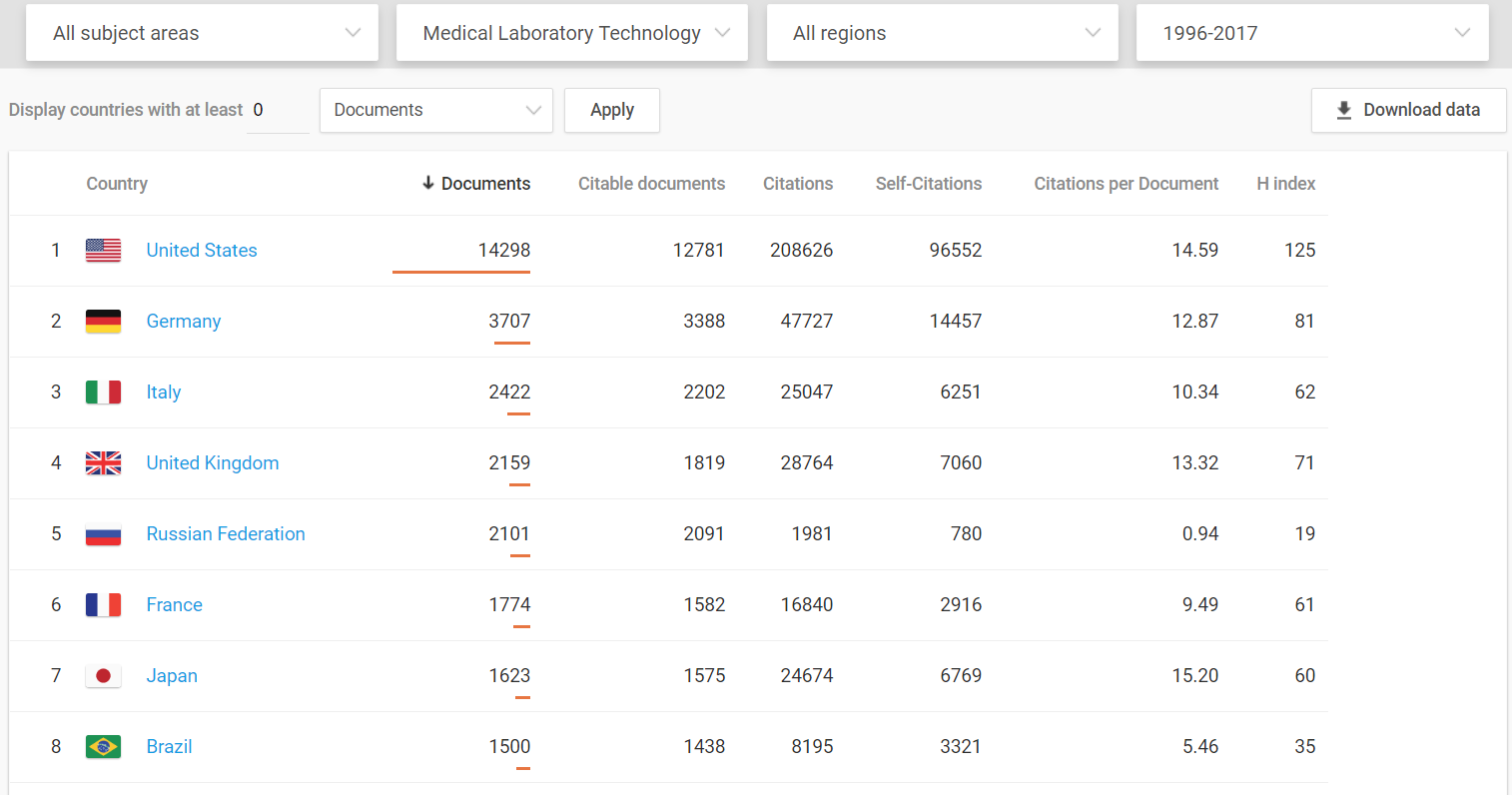

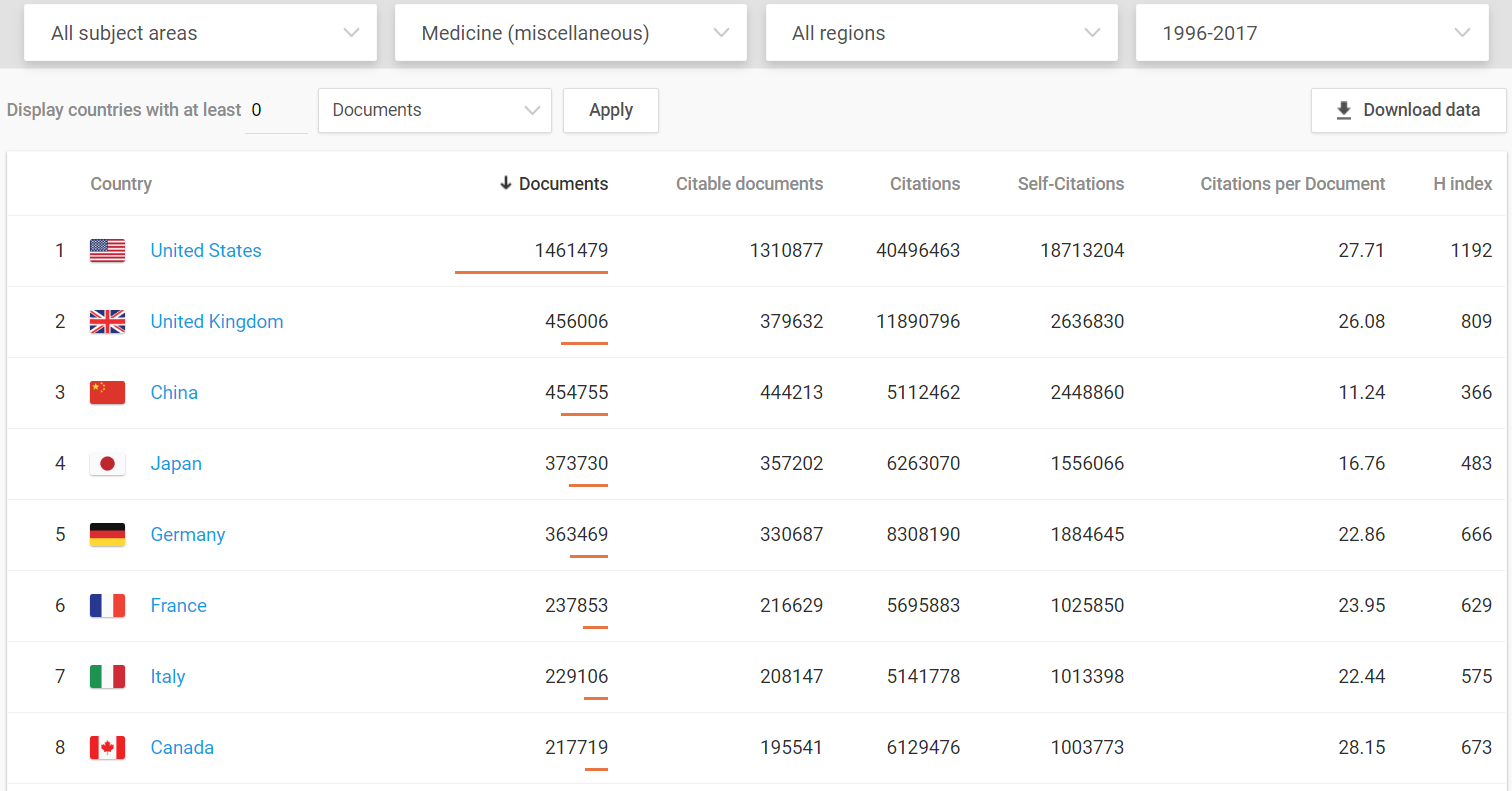

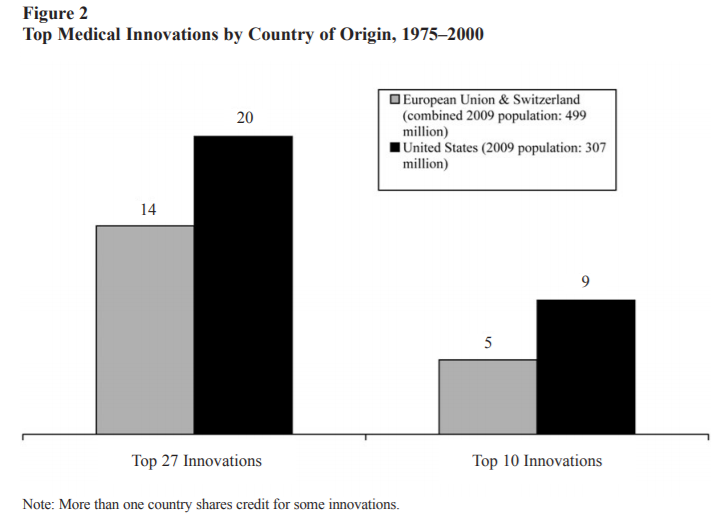

Medical innovations are vital to increased well-being, and the U.S. dominates in this arena.

Even when compared to the European Union as a whole, the United States still comes out on top.

As the authors of the two graphs above explain,

Although many factors are surely relevant, one likely contributor is differences in monetary compensation. Other things being equal, individuals and firms will tend to invest more in medical innovation when (a) they expect a larger return; (b) the returns will last for a longer period of time; and (c) the returns arrive sooner rather than later…Americans pay more for pharmaceuticals because of the nature of our health care system. Single-payer and other centrally organized health care systems, like those in much of Europe, are characterized by a great deal of monopsony (buyer) power that pushes down compensation…In addition to pushing down prices, centrally organized health care systems also limit the use of new drugs, technologies, and procedures. Those systems “control costs by upstream limits on physician supply and specialization, technology diffusion, capital expenditures, hospital budgets, and professional fees.” The result is that those countries use new innovations less extensively than the United States (pg. 8).

They conclude (and I agree), “The healthcare debate should address more than just covering the uninsured and controlling costs. It should also consider whether proposed policies will promote or hinder the ability of creative individuals to innovate” (pg. 11).