A recent working paper looks at the effects of India’s 1986 anti-child labor law. Once again, good intentions and actual outcomes are at odds with one another:

The estimated effect of the ban is to increase relative employment among children under the age of 14. Having an underage sibling leads to a 0.3 percentage point increase in the likelihood of engaging in work after the ban for the very young. While this point estimate is small, it is both statistically and economically significant; the pre-ban proportion of children employed in that age range is only 2 percent so the effect of the ban is to increase employment by 15% over the mean for this group. The ban increases the probability of employment by 0.8 percentage points (5.6% over the mean) for young children ages 10-13. However, older children ages 14-17 overall are unaffected by the ban. The effect for this group is both small relative to the mean and statistically insignificant. Again, the largest increase in child labor is in agriculture…which is consistent with the partial mobility case of the two-sector model where there is restricted entry into manufacturing (pg. 22).
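As a quick check on the quoted arithmetic: the "percent over the mean" figures are just the percentage-point effect divided by the pre-ban employment rate. Here is a minimal Python sketch. The function names are my own and purely illustrative. Running the same ratio backward suggests the pre-ban employment rate for ages 10-13 was roughly 14 percent, but that is my back-of-envelope inference, not a figure reported in the paper.

```python
def relative_effect(delta_pp: float, baseline_pct: float) -> float:
    """Percent change implied by an effect of delta_pp percentage points
    on a baseline rate of baseline_pct percent."""
    return 100.0 * delta_pp / baseline_pct


def implied_baseline(delta_pp: float, relative_pct: float) -> float:
    """Back out the baseline rate (in percent) from a percentage-point
    effect and its reported relative size (in percent).
    Illustrative inference only; the paper does not report this number."""
    return 100.0 * delta_pp / relative_pct


# Very young children: 0.3 pp on a 2 percent pre-ban employment rate
print(round(relative_effect(0.3, 2.0), 1))   # 15.0

# Ages 10-13: 0.8 pp reported as 5.6% over the mean
print(round(implied_baseline(0.8, 5.6), 1))  # 14.3
```

In other words, a small absolute effect can be a large relative one when the baseline rate is low, which is why the authors call a 0.3 point change economically significant.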

The authors then look at five measures of household welfare:

- Per capita expenditure.

- Per capita food expenditure.

- Caloric intake per capita.

- Staple share of calories; i.e., “a measure of household nutritional adequacy in the presence of caloric needs that are unknown or variable across households. [The] logic is that if households attach a high disutility to having caloric intake below caloric needs, they will substitute towards the cheapest sources of calories (staples)” (pg. 25).

- Household asset index; i.e., “a set of variables that capture the quality and quantity of housing, the type of energy used for cooking and lighting, and the quantity of electricity used (which is likely to be correlated with the number of appliances and durables used by the household)” (pg. 25).

Their findings?

We find a negative and statistically significant point estimate of the ban’s effect on four out of five welfare measures. The one exception is caloric intake per capita which has a positive but not statistically significant coefficient. This is consistent with households near-subsistence – the ones likely to be most affected by the ban – being unable to cut back on calories and instead reducing other aspects of household welfare (consuming more less tasty staples or selling assets) as well as the idea that increased child labor for these households may increase household caloric requirements and thereby constrain households from adjustment on this margin. However the changes for all of the welfare measures are quantitatively small – about 0.01 standard deviations of the pre-ban cross-section – and the standard errors are small enough to rule out large positive or negative effects of the ban (pg. 26).

Nonetheless, “we take this as evidence that the ban makes these households unambiguously worse off” (pg. 5). They conclude,

This paper is the first empirical investigation of the impact of India’s most important legal action against child labor. While the Child Labor (Prohibition and Regulation) Act of 1986 prevented employers from employing children in certain sectors and increased regulation of child labor in non-family run businesses, the net result of this ban appears to be an increase in child labor in some families. We find that child wages decrease in response to such laws and poor families send out more children into the workforce. Due to increased employment, affected children are less likely to be in school. These results are consistent with a two sector model with some frictions on mobility across sectors where the ban is more stringently enforced in one sector than the other. Importantly, we also examine the overall welfare effects of the ban on households. Along various measures of household consumption and expenditure, we find that the ban leads to small decreases in household welfare.

This paper does not intend to suggest that all child labor bans are useless. In fact, well formulated and implemented bans could absolutely help in eliminating child labor; but as we do in this case, research would have to examine how a decrease in child labor affects child and household welfare (Baland and Robinson (2000); Beegle, Dehejia and Gatti (2009)). To echo the reasoning in Basu (2004): “Legal interventions, on the other hand, even when they are properly enforced so that they do diminish child labor, may or may not increase child welfare. This is one of the most important lessons that modern economics has taught us and is something that often eludes the policy maker” (pg. 30).

This isn’t all that surprising. Consider Paul Krugman:

In 1993, child workers in Bangladesh were found to be producing clothing for Wal-Mart, and Senator Tom Harkin proposed legislation banning imports from countries employing underage workers. The direct result was that Bangladeshi textile factories stopped employing children. But did the children go back to school? Did they return to happy homes? Not according to Oxfam, which found that the displaced child workers ended up in even worse jobs, or on the streets–and that a significant number were forced into prostitution.

The 1997 UNICEF State of the World’s Children report had similar findings:

The consequences for the dismissed children and their parents were not anticipated. The children may have been freed, but at the same time they were trapped in a harsh environment with no skills, little or no education, and precious few alternatives. Schools were either inaccessible, useless or costly. A series of follow-up visits by UNICEF, local non-governmental organizations (NGOs) and the International Labour Organization (ILO) discovered that children went looking for new sources of income, and found them in work such as stone-crushing, street hustling and prostitution — all of them more hazardous and exploitative than garment production. In several cases, the mothers of dismissed children had to leave their jobs in order to look after their children.

In cases like this, legislation is rarely the answer. In fact, according to economist Robert Whaples,

Most economic historians conclude that…legislation was not the primary reason for the reduction and virtual elimination of child labor between 1880 and 1940 [in the United States]. Instead they point out that industrialization and economic growth brought rising incomes, which allowed parents the luxury of keeping their children out of the work force. In addition, child labor rates have been linked to the expansion of schooling, high rates of return from education, and a decrease in the demand for child labor due to technological changes which increased the skills required in some jobs and allowed machines to take jobs previously filled by children. Moehling (1999) finds that the employment rate of 13-year olds around the beginning of the twentieth century did decline in states that enacted age minimums of 14, but so did the rates for 13-year olds not covered by the restrictions. Overall she finds that state laws are linked to only a small fraction – if any – of the decline in child labor. It may be that states experiencing declines were therefore more likely to pass legislation, which was largely symbolic.[ref]This is true of most sweatshop conditions.[/ref]

The road to hell and all that.

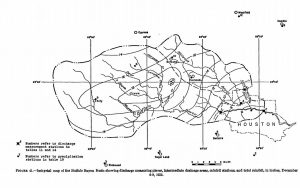

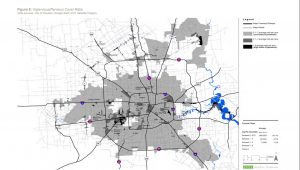

The following map of the Houston storm’s effects shows unsettling similarities to what we just witnessed. (Note that this map does not include the areas to the north of town, where rainfall in 1935 was significantly higher. These are the suburbs that flooded along Cypress and Spring Creeks last weekend and the farmland that similarly flooded in 1935.)

As mentioned

In Scotland, students exercised complete consumer control over with whom they studied and which subjects they deemed relevant. Oxford—and in fact most other European universities—employed a system similar to the way that American universities handle tuition payments today: One tuition payment was made directly to the university, and the university decided how to distribute what came in…Smith points out how [Oxford] often fell short of the Scottish system, where direct payment of fees served as motivation for faculty responsibility. “The endowments of [British] schools and colleges have necessarily diminished more or less the necessity of application in the teachers,” Smith writes in his opening sally against bundling the costs of education. “In the university of Oxford, the greater part of the publick professors have, for these many years, given up altogether even the pretence of teaching.” In the Scottish system, “the salary makes but a part, and frequently but a small part of the emoluments of the teacher, of which the greater part arises from the honoraries or fees of his pupils,” he explains.
