The list of fairly big-name outlets covering the 2015 Hugos / Sad Puppies controversy has gotten pretty long[ref]Slate, Salon, Entertainment Weekly, the Guardian, the Telegraph, the Daily Dot, io9 along with Breitbart (twice) and the National Review[/ref], but here’s how you know this is Officially a Big Deal: George R. R. Martin has been in a semi-polite back-and-forth blog argument with Larry Correia for days. That’s thousands and thousands of words that Mr. Martin has written about this that he could have spent, you know, finishing up the next Game of Thrones book. I think we can officially declare at this point that we have a national crisis.

Martin’s blog posts are a good place to start because his main point thus far has been to rebut the central claim that animates Sad Puppies. To wit: they claim that in recent years the Hugo awards have become increasingly dominated by an insular clique that puts ideological conformity and social back-scratching ahead of merit. While the more shrill voices within the targeted insular clique have responded that Sad Puppies are a bunch of racist, sexist bigots, Martin’s more moderate reply has been: Where’s the Beef? Show me some evidence of this cliquish behavior. Larry Correia has responded here.

As these heavyweights have been trading expert opinion, personal stories, and plain old anecdotes, it just so happens that I spent a good portion of the weekend digging into the data to see if I could find any objective evidence for or against the Sad Puppy assertions. It’s been an illuminating experience for me, and I want to share some of what I learned. Let me get in a major caveat up front, however. There’s some interesting data in this blog post, but not enough to conclusively prove the case for or against Sad Puppies. I’m running with it anyway because I hope it will help inform the debate, but this is a blog post, not a submission to Nature. Calibrate your expectations accordingly.

One additional note: unless otherwise stated, the Hugo categories that I looked into were the literary awards for best novel, novella, novelette, and short story. There are many more Hugo categories (for film, graphic novel, fan writer, etc.) but the literary awards are the most prestigious and also have the most reliable data (since a lot of the other categories come and go).

Finding 1: Sad Puppies vs. Rabid Puppies

I have been following Sad Puppies off and on since Sad Puppies 2. SP2 was led by Larry Correia, and his basic goal was to prove that if you got an openly conservative author on the Hugo ballot, then the reigning clique would be enraged. For the most part, he proved his case, although the issue was muddied somewhat by the inclusion of Vox Day on the SP2 slate. Vox Day tends to make everyone enraged (as far as I can tell), and so his presence distorted the results somewhat.

This year Brad Torgersen took over for Sad Puppies 3 with a different agenda. Instead of simply provoking the powers that be, he aimed to break their dominance over the awards by appealing to the middle. For that reason, he went out of his way to include diverse writers on the SP3 slate, including not only conservatives and libertarians, but also liberals, communists, and apolitical writers. Even many leading critics of the Sad Puppies (for instance John Scalzi[ref]“I’m feeling increasingly sorry for the nominees on the Hugo award ballot who showed up on either Puppy slate but who aren’t card-carrying Puppies themselves, since they are having to deal with an immense amount of splashback not of their own making.” from Human Shields, Cabals and Poster Boys[/ref] and Teresa Nielsen Hayden[ref]“Indications are that a fair number of them [nominees on the Sad Puppy slate who got onto the ballot], maybe a majority, are respectable members of the SF community who, for one reason or another, are approved of by the SPs while not being ideologically Sad Puppies themselves.” from this comment on her post Distant thunder, and the smell of ozone.[/ref]) concede that several of the individuals on the Sad Puppies slate were not politically aligned with Sad Puppies. That fact was my favorite part about Sad Puppies: the attempt to reach outside their ideological borders demonstrated an authentic desire to depoliticize the Hugos instead of just claiming them for a new political in-group.

What I didn’t know until the finalists were announced just this month is that the notorious Vox Day had created his own slate: Rabid Puppies. Rather than angling toward the middle (like Torgersen), Day’s combative and hostile approach kept Rabid Puppies distinctly on the fringe. To give you a sense of the level of animosity here, several folks agreed to be on the Sad Puppies slate only on the condition that Vox Day was not. Despite this animosity and the very different tones, when it came time to pick a slate, Vox Day basically copied the SP3 suggestions and then added a few additional writers (mostly from his own publishing house) to get a full slate.[ref]There are 5 finalists per category. SP3 didn’t propose a full slate: they had fewer than 5 nominees for several categories. RP ran a full slate.[/ref]

Because Torgersen and Correia are more prominent, when I did learn about RP I assumed it was a minor act riding on the coattails of Sad Puppies 3 and little more. For this reason, I was frustrated when the critics of Sad Puppies tended to conflate Torgersen’s moderate-targeted SP3 with Vox Day’s fringe-based RP. But then I started looking at the numbers, and they tell a different story.

The Sad Puppies 3 campaign managed to get 14 of their 17 recommended nominees through to become finalists, for a success rate of 82.4%. Meanwhile, the Rabid Puppies managed to get 18 or 19 of their 20 recommendations through for a success rate of 90-95%.[ref]Larry Correia made it onto the slate but turned his position down. If the person who took his spot came from the non-RP authors, it means that the RP slate was initially 95% successful. If it was taken by another RP author who didn’t make the first cut then their success rate was 90%.[/ref]
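For anyone who wants to check the arithmetic, here is a minimal sketch that uses only the counts quoted above. The function name is mine and purely illustrative.

```python
def success_rate(finalists: int, recommended: int) -> float:
    """Percentage of a slate's recommendations that became finalists."""
    return 100.0 * finalists / recommended


# Counts quoted in this post for the four literary categories.
sp3_rate = success_rate(14, 17)
rp_rate_low = success_rate(18, 20)   # Correia's spot went to another RP pick
rp_rate_high = success_rate(19, 20)  # Correia's spot went to a non-RP author

print(f"SP3: {sp3_rate:.1f}%")
print(f"RP:  {rp_rate_low:.0f}-{rp_rate_high:.0f}%")
```

The two RP figures correspond to the two scenarios described in the footnote about Correia declining his spot.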

What’s more, however, there was one category where the SP3 and RP slates conflicted: the Short Story category. Here’s how those results ended up:

| Author | Source | Result |
| --- | --- | --- |
| Annie Bellet | Both | Success |
| Kary English | Both | Success |
| Steve Rzasa | RP | Success |
| John C. Wright | RP | Success |
| Lou Antonelli | RP | Success |
| Megan Grey | SP3 | Failure |
| Steve Diamond | SP3 | Failure |
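The same table can be tallied by slate in a few lines. This is only a sketch: the rows are copied from the table, and the helper name `tally_by_slate` is my own invention.

```python
from collections import Counter

# Rows copied from the Short Story table: (author, slate, made_ballot).
short_story = [
    ("Annie Bellet", "Both", True),
    ("Kary English", "Both", True),
    ("Steve Rzasa", "RP", True),
    ("John C. Wright", "RP", True),
    ("Lou Antonelli", "RP", True),
    ("Megan Grey", "SP3", False),
    ("Steve Diamond", "SP3", False),
]


def tally_by_slate(rows):
    """Return {slate: (finalists, recommended)} for each slate label."""
    recommended = Counter(slate for _, slate, _ in rows)
    finalists = Counter(slate for _, slate, made in rows if made)
    # Counter returns 0 for slates that placed no finalists.
    return {slate: (finalists[slate], recommended[slate]) for slate in recommended}


results = tally_by_slate(short_story)
for slate, (made, total) in results.items():
    print(f"{slate}: {made} of {total} made the ballot")
```

Grouping this way separates the picks unique to each slate from the ones they shared, which is the comparison that matters here.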

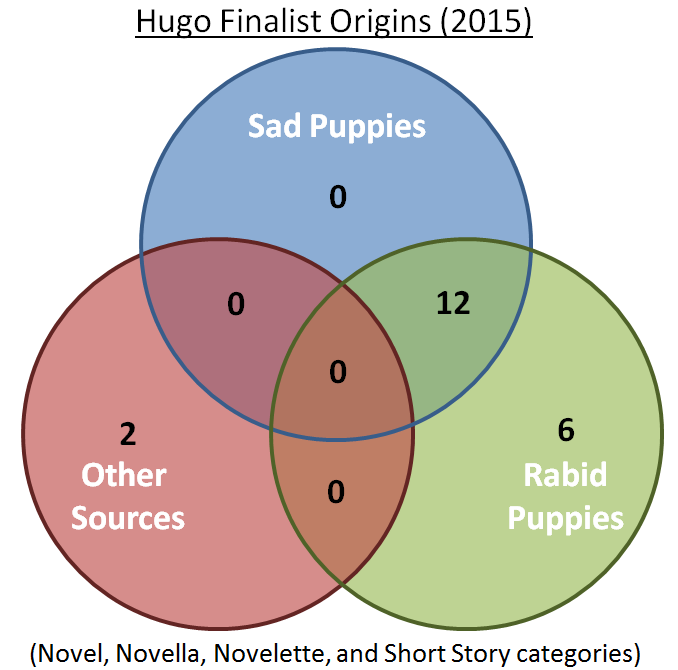

In other words, where SP3 and RP actually went head-to-head, Rabid Puppies beat SP3. It appears as though in terms of raw voting power, the Rabid Puppies voters outgunned the Sad Puppies 3 voters. I put together a simple Venn Diagram that hammers that point home by showing where each of the 20 Hugo finalists came from:

If you want to know where the finalists come from, it looks like Rabid Puppies can’t possibly be ignored. For someone like me who really supported the moderate, inclusive aims of Sad Puppies 3, this is a sobering realization.

Finding 2: Gender in Sci-Fi

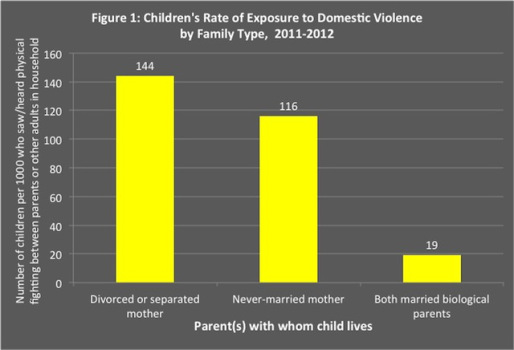

I put together a table of all the Hugo nominees and winners with their gender. I know that gender isn’t the only diversity issue but it’s the easiest one to find data on. Here’s what I found:

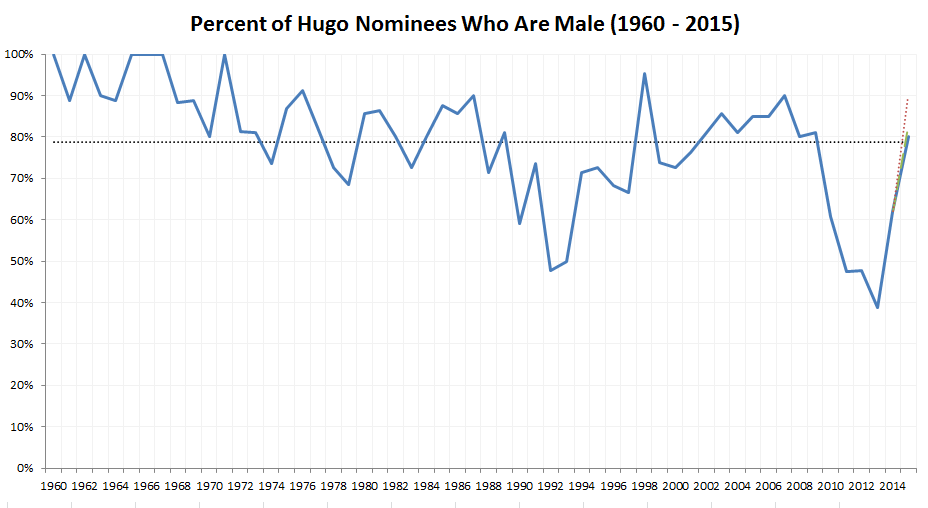

It is easy to see how a social justice advocate would interpret this chart. In the 1960s the patriarchy reigned supreme and often 100% of Hugo nominees were male. As the sci-fi community grew more mature and progressive, however, the patriarchy’s grip weakened. More and more female nominees entered the scene. But now SP3 and RP have rolled back all that progress, and as a result the 2015 finalists are right back at the status quo: the dotted line representing about 80% male nominees on average over the entire 1960 – 2015 period. It’s a simple story: SP3 and RP are agents of the patriarchy sent to re-establish the status quo. If you want to know why so many social justice advocates are very, very angry about SP3 and RP, this is why.

But there are some serious complications to this narrative. First, the diversity of the early 2010s was not unprecedented. There wasn’t a long, slow, continuous growth of diversity. There were a lot of female nominees in the early 1990s, and this gets omitted from articles that act as though sci-fi had achieved some milestones of diversity for the first time. It’s true that the 2010s were the best yet, but the most important symbolic line was crossed way back in 1992 when 52% (more than half) of the nominees were women. Second, the rebound towards the overall average started last year, not with the 2015 finalists. In 2013 there was an all-time record percentage of female finalists (61%) but in 2014 the numbers had flipped and 62% of the finalists were male. Although Sad Puppies 2 did exist in 2014, it had very little impact and so the rebound towards the status quo cannot reasonably be blamed entirely on SP3 / RP.

While we’re at it, it’s important to note that neither SP3 nor RP were 100% male (as has been widely and erroneously reported[ref]Most notably by EW, although you really need to read the original version (prior to threats of libel and numerous corrections and edits) with the original headline of Hugo Award nominations fall victim to misogynistic, racist voting campaign to get the full effect.[/ref]). Those little green and red lines at the very end of the chart show what the gender ratio would have looked like if SP3 had won completely (82.4%, the green line) or if RP had won completely (90%, the red line).

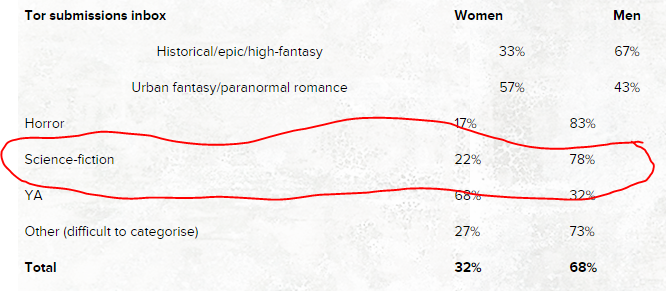

But the fourth complication is by far the most important one. Back in 2013 a Tor UK editor actually divulged the gender breakdown of the submissions they receive by genre.

So, over the history of the Hugo awards from 1960 – 2015, 79% of the nominees have been male. In 2013, 78% of the folks submitting sci-fi to Tor UK were male.

There were a lot of very angry reactions to this post. For example, “I find this article disappointing, ignorant, and damaging,” starts one response which I found from a current Damien Walter blog post. It’s hard to see why an article that basically just presented factual information would be reviled, especially when the article concludes:

As a female editor it would be great to support female authors and get more of them on the list. BUT they will be judged exactly the same way as every script that comes into our in-boxes. Not by gender, but how well they write, how engaging the story is, how well-rounded the characters are, how much we love it.

This is an entirely moderate, reasonable position to take. Science fiction has been called “the literature of ideas” by sci-fi legend Pamela Sargent. And in a genre where ideas are paramount, so is diversity. Diversity is not an intrinsically liberal value. After all, conservatives are the ones who tend to believe in gender essentialism, which would necessarily underscore the importance of having female viewpoints since (if gender essentialism holds), female viewpoints are inherently different than male viewpoints in at least some regards, and thus you will get more perspectives if you include women as well as men. Thus: conservatives can be just as invested in welcoming women into the genre as writers and as fans.

But if you have a situation where men and women are equally talented writers and where men outnumber women 4 to 1 and where the Hugo awards do a good job of reflecting talent, then 80% of the awards going to men is not evidence that the awards are biased or oppressive. It is evidence that they are fair. In that scenario, 80% male nominees is not an outrage. It’s the expected outcome.
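One way to make “expected outcome” concrete is a back-of-the-envelope binomial model. This is a sketch under a loud assumption that is mine, not Tor UK’s or the Hugo administrators’: each of the 20 finalist slots is treated as an independent draw from a pool that is 78% male. Real nominations are not independent draws, so treat the output as a rough yardstick only.

```python
from math import comb


def expected_count(n: int, p: float) -> float:
    """Expected number of 'successes' in n independent draws."""
    return n * p


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


N_FINALISTS = 20  # five finalists in each of the four literary categories
P_MALE = 0.78     # male share of sci-fi submissions in the Tor UK figures

print(f"Expected male finalists: {expected_count(N_FINALISTS, P_MALE):.1f}")
# 18 of 20 corresponds to the 90% figure quoted for a full RP sweep.
print(f"P(at least 18 male): {prob_at_least(18, N_FINALISTS, P_MALE):.3f}")
```

Under this toy model, the question stops being “is 80% too high?” and becomes “how far from the submission baseline is a given ballot?”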

Of course this just raises the next question: why is it that men outnumber women 4:1 in science fiction? For that matter, why do women outnumber men 2:1 in the YA category? Why is it that only the urban fantasy / paranormal romance category is anywhere close to parity? These are all fascinating questions and also important questions. I believe we can only hope to address them in an open-ended conversation. This is my primary concern with social justice advocates. Because they are tied to a certain ideological version of feminism[ref]Christina Hoff Sommers calls it gender feminism as opposed to equity feminism, and Steven Pinker describes it as “an empirical doctrine committed to three claims about human nature. The first is that the differences between men and women have nothing to do with biology but are socially constructed in their entirety. The second is that humans possess a single social motive—power—and that social life can be understood only in terms of how it is exercised. The third is that human interactions arise not from the motives of people dealing with each other as individuals but from the motives of groups dealing with other groups—in this case, the male gender dominating the female gender.”[/ref] that views human society through a Marxist-infused lens that emphasizes power struggles between groups and sees gender as socially constructed, they are locked into a paradigm where the mere fact that 80% of sci-fi writers are male (let alone Hugo nominees) is conclusive evidence of patriarchal oppression. From within that paradigm, there’s nothing left to talk about. Anybody who wants to have a discussion (other than to decide which tactics to use to smash the patriarchy) seems like an apologist for male domination. The social justice paradigm is a hammer that makes every single gender difference look like an evil nail.

So the chart isn’t as clear as it first appears. What you take from it depends entirely on your ideological framework. If you’re a social justice advocate, it’s a smoking gun proving conclusively that sci-fi is struggling bitterly to break free from the grip of the patriarchy. If you’re not a social justice advocate it might be evidence of systemic sexism in the sci-fi community that leads to a greater ratio of male writers or it might be evidence that more men like sci-fi than women. Or both. Or neither. It’s interesting, but it’s not conclusive.

Finding 3: Goodreads Scores vs. Hugo Nominations

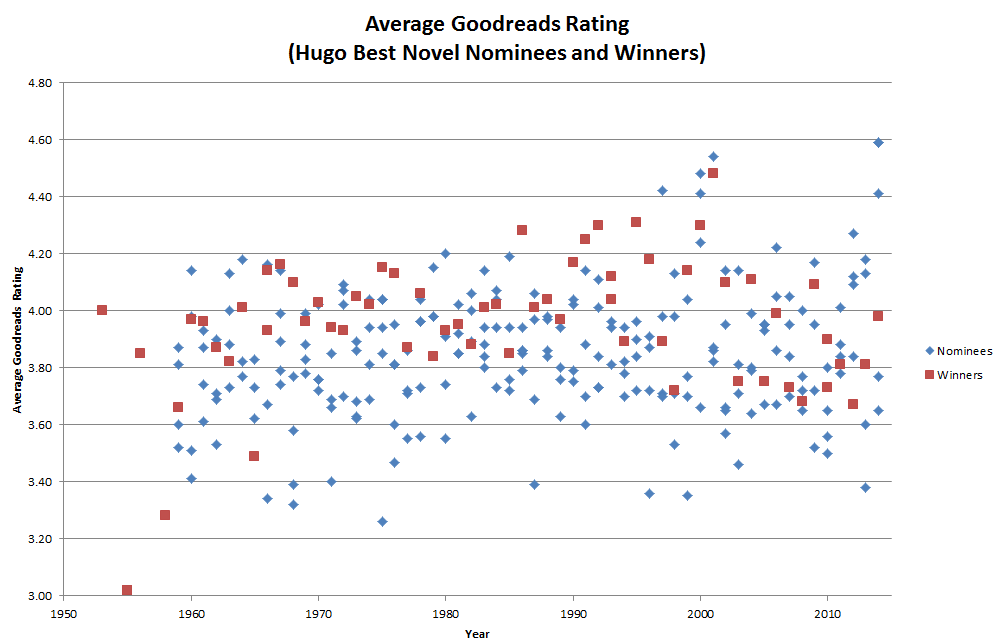

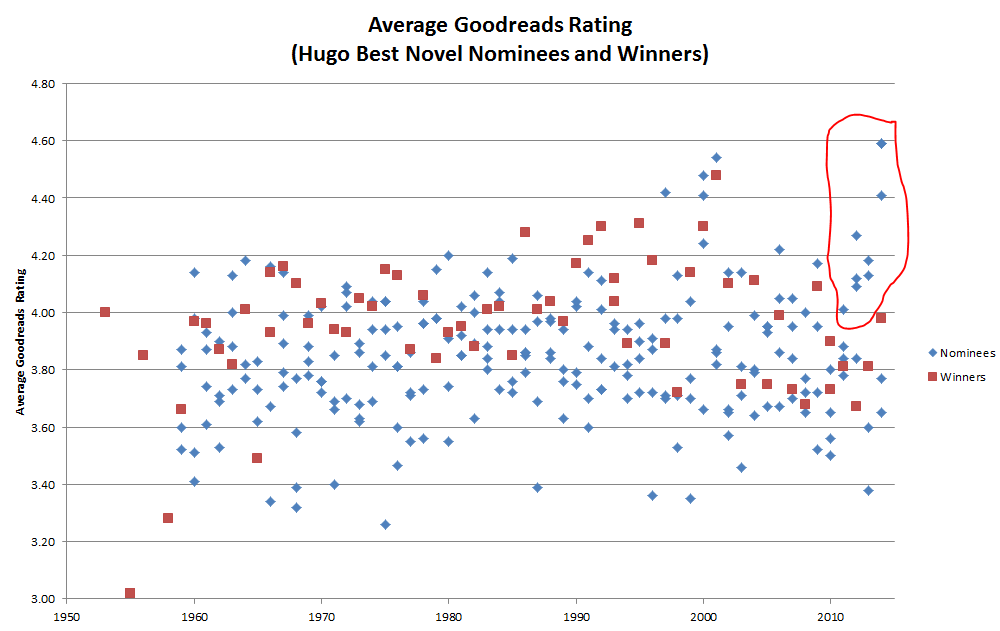

If the last chart depicted clearly the reasons why social justice warriors are so opposed to SP / RP, this chart depicts clearly the reasons why SP came into being in the first place. What it shows is the average Goodreads review for the Hugo best novel winners (in red) and nominees (in blue) for every year going back to the first Hugo awards awarded in 1953.[ref]Actually, I don’t have the nominees for some of the earliest years, which is why there are red squares but no blue diamonds at the far left end of the chart.[/ref] The most interesting aspect of the chart, from the standpoint of understanding where SP is coming from, is the fairly extreme gap between the scores of the nominees and the winners in the last few years, with the nominees showing much higher scores than the winners. Here it is again, with the data points in question circled:

Let me be clear about what I think this shows. It does not show that the last few Hugo awards are flawed or that recent Hugo winners have been undeserving. There is no law written anywhere that says that average Goodreads score is the objective measure of quality. That is not my point. All those data points show is that there has been a significant difference of opinion between the Hugo voters who picked the winners and the popular opinion. What’s more, they show that this gap is a relatively recent phenomenon. Go back 10 or 20 years and the winners tend to cluster near the top of the nominees, showing that the Hugo voting process and the Goodreads audience were more or less in tune. But starting a few years ago, a chasm suddenly opens up.

Of course there have been plenty of years in the past where Goodreads ranked a losing finalist higher than the Hugo winner, but rarely have there been so many in a row and particularly so many in a row with such wide gaps. To a Sad Puppy proponent, this chart is just as much a smoking gun as the previous one because it shows that something has changed in just the last few years that has led to a significant divergence between the tastes reflected by the Hugo awards and the tastes of the sci-fi audience at large. Whether you chalk it up to a social clique, political ideology, secret conspiracy theories, or just plain old herd mentality, it looks like the Hugo awards and popular taste have parted ways. Which, when Correia and Torgersen talk about elitism and insularity, is exactly the central accusation that the Sad Puppies folks are making.

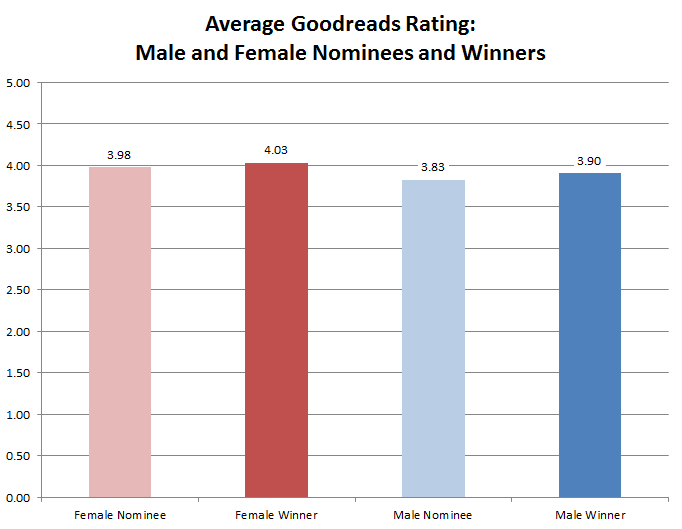

Just as with the prior chart, however, closer inspection complicates the picture. First, a social justice advocate may very well reply to the chart by saying, “Gee… lots of women get nominated and win and then review scores go down for nominees and winners. Sexism, much?” Turns out that isn’t likely, however, because Goodreads readers tended to rate female authors higher than male authors (at least within the sample of Hugo nominees and winners).

If anything, it suggests the possibility of mild sexism within the WorldCon community since it could indicate that female writers have to achieve higher popularity in order to get nominated and win. I didn’t run any statistical tests to see if the differences were significant, however, so let’s set that aside for the time being. The point is, blaming the low scores of Hugo winners vs. nominees over the last year on sexist Goodreads reviewers is a non-starter. It’s also worth pointing out that the winner scores haven’t suddenly gotten lower just over the last few years while the proportion of female nominees has gone up. They’ve actually been in a long-term slump (relative to Goodreads ratings) going back to the early 2000s with an average of around 3.7 compared to the all-time average of 3.96. Meanwhile, a lot of the losing nominees have been off-the-charts popular with scores of 4.2 and above. This is bound to lead to some hard feelings and bitterness.
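Since I mentioned that I didn’t run any significance tests on the male vs. female rating difference, here is a sketch of one simple way it could be done: a two-sided permutation test on the difference in mean ratings. This is a technique I’m swapping in for illustration, not something behind the charts in this post, and the function name and parameters are my own.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test on the difference in group means.

    Returns the share of random relabelings whose absolute mean
    difference is at least as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so reruns give the same answer
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            hits += 1
    return hits / n_resamples
```

To use it, pass the Goodreads averages for female-authored nominees as one list and male-authored nominees as the other. A small p-value would suggest the rating gap is unlikely to be noise.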

When there are this few data points it pays to start looking at individual instances, and this is where the picture does start to get a little complicated. The most recent winner is Ann Leckie for Ancillary Justice. That book is rated 3.98, versus two nominees with much higher ratings: Larry Correia’s Warbound (4.41 with 3.6k ratings) and Robert Jordan / Brandon Sanderson’s complete Wheel of Time series (4.59 with just 376 ratings). Wheel of Time is a special case because it was a nominee for an entire series of books. Only the most devoted fans are likely to leave a rating on the entire series, and that’s why there are so few ratings.[ref]Typical Hugo winners have 20,000 – 30,000 ratings.[/ref] It’s probably also why they are so high. A better approach would be to average the individual average ratings of the books in the series, but I haven’t taken the time to do that. In any case, Wheel of Time is suspect as a comparison for that year. That leaves us with Warbound, but it’s a special case, too. Larry Correia drew a lot of fire that year for SP2, and he had no realistic chance of winning no matter how good his book was as a result. Fair or unfair as that might be, it means we can’t really conclude anything by comparing his book with Leckie’s. Take those two out, and Leckie was the highest-rated nominee. With a score of 3.98, her book was also right in line with the long-run average and significantly higher than the short-run average. After digging deeper, it’s really hard to shoehorn the 2014 results into the narrative of divergence between the Hugo winners and the general sci-fi audience.
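The 2014 comparison boils down to a few subtractions. The sketch below uses only the ratings quoted in this post. The other two 2014 finalists are left out because their scores aren’t given here, and the helper name is hypothetical.

```python
from typing import Optional


def gap_to_best_rival(winner: float, rivals: list[float]) -> Optional[float]:
    """Highest rival rating minus the winner's rating; None if no rivals given."""
    if not rivals:
        return None
    return max(rivals) - winner


WINNER = 3.98  # Ancillary Justice
RIVALS = {"Warbound": 4.41, "Wheel of Time (series)": 4.59}
LONG_RUN_AVG = 3.96   # all-time average winner rating quoted earlier
SHORT_RUN_AVG = 3.7   # approximate average since the early 2000s

gap_all = gap_to_best_rival(WINNER, list(RIVALS.values()))
# Dropping both special cases leaves no rival with a rating quoted in this post.
gap_excluding_special_cases = gap_to_best_rival(WINNER, [])

print(f"Gap to best-rated rival: {gap_all:.2f}")
print(f"Winner vs. long-run average:  {WINNER - LONG_RUN_AVG:+.2f}")
print(f"Winner vs. short-run average: {WINNER - SHORT_RUN_AVG:+.2f}")
```

With both special cases included the gap is large. With them set aside, there is nothing left in the quoted numbers for Leckie to trail.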

But there is still a trend worth considering. Going back to 2013 and earlier, a succession of fairly low-rated books won despite stiff competition from much more popular nominees. The 2013 and 2010 winners had some of the lowest reviews of the last half century, came last or second-to-last vs. the nominees for that year, and won out over nominees with significantly higher scores. Again: I am not making a judgment call on those particular books. I’m merely pointing out how wide the gap is.

Another shortcoming of this approach is that I’m only comparing Hugo nominees vs. winners, and the Sad Puppies have been claiming that conservative writers can’t get on the ballot at all, not that they keep losing once they get there. The only way to really evaluate that claim would be to contrast the Hugo nominees and winners on the one hand vs. high-rated, eligible sci-fi books that never even made it onto the ballot. If most of the highest rated, eligible books made it onto the ballot in the past but more recently are being ignored, that would be strong evidence in favor of the Sad Puppies’ fundamental grievance. That analysis is possible to do, but gathering the data is trickier. I hope to be able to tackle it in the coming months.
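To give a sense of what that follow-up analysis might look like, here is a rough sketch of the core measurement. Everything in it is an assumption on my part: the function name, the input format, and the choice of a top-10 cutoff. I haven’t gathered the data to feed it yet.

```python
def top_rated_coverage(books, top_n=10):
    """Fraction of the top_n highest-rated eligible books that reached the ballot.

    books: iterable of (title, average_rating, on_ballot) tuples for one year.
    """
    ranked = sorted(books, key=lambda b: b[1], reverse=True)[:top_n]
    if not ranked:
        raise ValueError("no eligible books supplied")
    return sum(1 for _, _, on_ballot in ranked if on_ballot) / len(ranked)
```

Running this once per award year and plotting the result would show whether the ballot has drifted away from the best-rated eligible books. It would also need a minimum ratings-count filter so that obscure books with a handful of five-star reviews don’t dominate the ranking.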

Closing Thoughts

I still think that Sad Puppies have a legitimate point. Their goal was to get a few new faces out there who otherwise wouldn’t have been considered. I think that’s an admirable goal, and I think that there are some folks on the ballot today who (1) deserve to be there and (2) wouldn’t ever have gotten there without Sad Puppies. And I know that even some of the critics of SP3 agree with that assessment (because they told me so).

The critics of Sad Puppies have a couple of important points too, however. First: concern over gender representation is legitimate. Second: it’s tricky for the Sad Puppies to make their case without appearing to disparage the Hugo winners over the last few years (much as the folks on the SP3 slate are being disparaged even before we know who has won.) Combine that uncomfortable implication (even if unwarranted) with the fact that sweeping the ballot pushed a lot of deserving works out of consideration, and it’s justifiable for the critics to be, well, critical.

I hope that Sad Puppies continues, but I hope that they take steps to avoid hogging the whole ballot. They could recommend a lot more or a lot fewer folks per category. If they recommend 10 folks for best short story, for example, it forces possible voters to (1) read more sci-fi and (2) spread their votes around instead of voting en bloc. If they recommend 2 folks for best short story, any bloc voting will be confined to a narrow portion of the ballot. Either alternative is better than sweeping most or all of a ballot.[ref]It’s worth pointing out that I think nobody in SP had any clue that they would be this successful, and that their sweeping of the ballot was an accident this year.[/ref]

Finally, I’d like for some Sad Puppies folks to get together with some of their critics and see if they can hammer out their differences for the good of the awards and the community as a whole. I have to give props to Mary Robinette Kowal (very much not a Sad Puppy supporter) for being exemplary in this regard. She has called on folks on her side to knock it off with the death threats and the hate mail, and also has started a drive to get more people supporting WorldCon memberships so that they can vote as well. For his part, Larry Correia has stepped in to stop his supporters from attacking Tor as a publisher. These are all good signs, and I hope that more moderate voices can prevail. Especially because the radicals on both sides are the ones threatening to nuke the entire award system. Social justice warriors are campaigning for Noah Ward (get it?) to shut down Sad Puppies definitively. Meanwhile, Vox Day has already pledged that he would retaliate by trying to shut down the entire award system next year with a No Award campaign of his own for Rabid Puppies 2. Given the first observation in this post, such a threat should be taken seriously.

Sad Puppies 3 was a good idea, but the execution was lacking this year. The best solution for everyone is for the voters to read each book and vote according to quality, including No Award if that’s what they genuinely feel is the right vote based strictly on the quality of the stories. And it is also for SP4 to get out ahead and take steps to avoid repeating the ballot sweep next year as well as to continue to shore up support among moderates, liberals, and apolitical folks to try and depoliticize the entire discussion a little bit.

After all the anger and vitriol over the past couple of weeks, there’s still a way for good to come of this. At the very least, I dearly hope that the legacy of the Hugo awards can be preserved.